You might think it must be easy to define randomness, but nothing could be further from the truth. Not only is it difficult to create random events or sequences of numbers, verifying whether something that we have produced really is random is no easy task either. Many great mathematicians throughout history have examined the problem of randomness, but it was only a short while ago, in the era of computers and information technology, that the questions concerning randomness revealed themselves in all their complexity and appeal.

The paradox of the definition of randomness

It would be easiest to define randomness as a series of events taking place without any meaning or independent of any possible rule. Random is what has neither cause nor meaning. Nevertheless, it is very important not to confuse our subjective unawareness of rules with the objective nonexistence of such laws. We can quite easily come to the conclusion that a certain sequence of numbers is random when we cannot recognize any rule that might govern it, while it is likely that we just cannot make out the pattern. A good example of this is the number pi which represents the ratio of a circle’s circumference to its diameter. The definition of pi is very simple, but if we were to see nothing but the long list of decimals with the beginning 3.1415… hidden, it would be extremely difficult to figure out that it is, in fact, a sequence which is far from random.

One could even say that the definition of randomness is, in a way, a paradox. On the one hand, we say that a truly random sequence cannot conceal any rule that would enable us to recreate the sequence, while on the other hand, requiring the absence of all patterns within a sequence leads to a very restricting definition which is almost impossible to apply in practice. For something to be random, it must meet very well defined conditions. Randomness is thus defined by the complete absence of form which is, on the other hand, a very strictly defined form in itself, only with a negative sign.

The shortest possible instruction

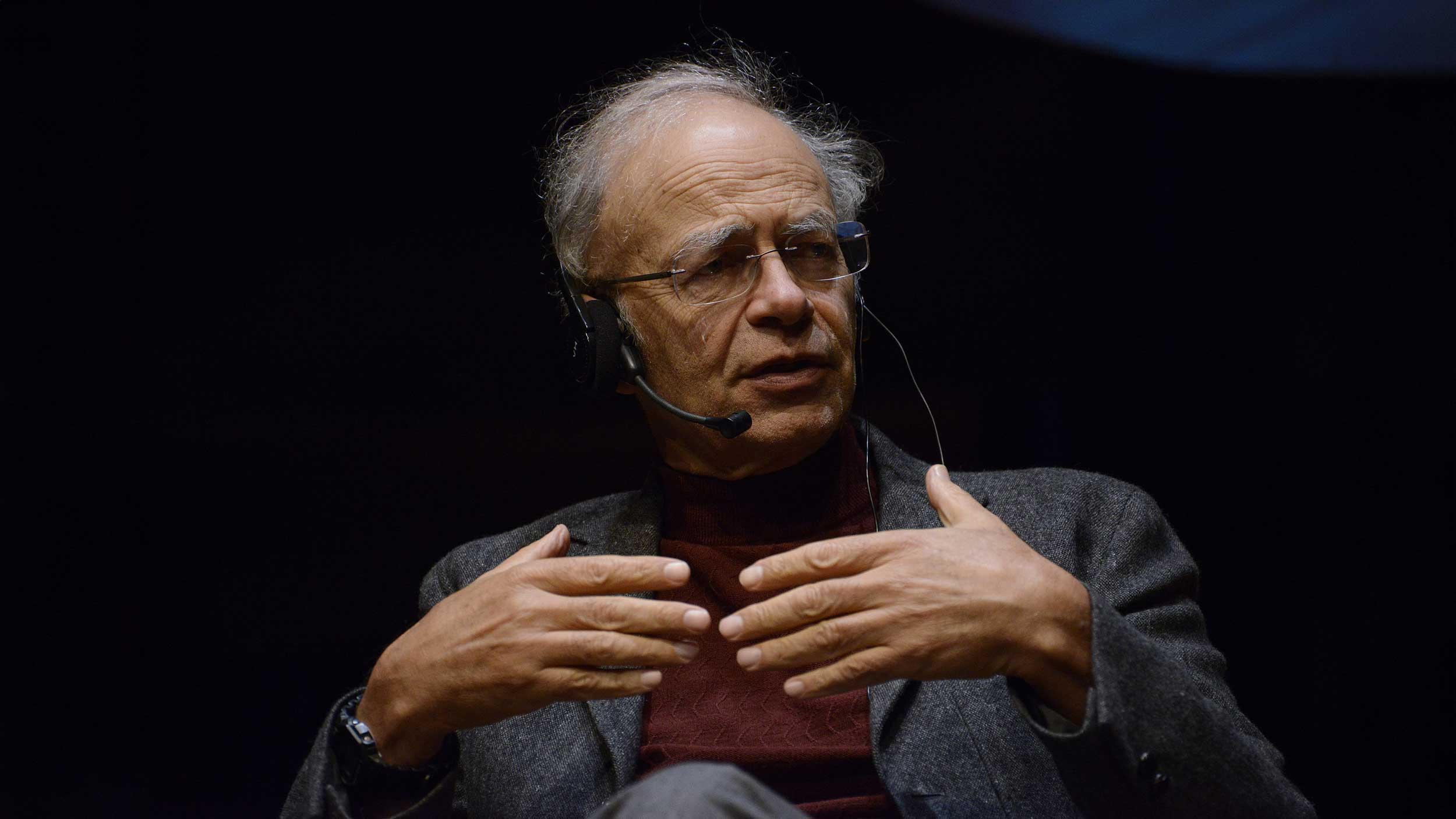

In the mid-1960s, the mathematicians Andrej Kolmogorov, Gregory Chaitin and Ray Solomonoff all independently invented a way to effectively define randomness in the era of computers and the digital recording of information. They linked the definition of randomness to the concept of algorithmic complexity. This sounds very complicated, but is actually based on a simple idea.

Andrej Kolmogorov, a renowned Russian mathematician, defines the complexity of a thing as the length of the shortest recipe (algorithm) required to make it. Cakes are usually more complex than bread, because the instructions for making them are normally more extensive. Similarly, orange juice is less complex than beer, for example, as we can reduce the recipe for making the juice to no more than two words: “Squeeze oranges”, while the instructions for making beer are much longer.

Following the same pattern, the complexity of a number is defined as the length of the most simple computer program that can write it out. The sequence 01010101010101010101 can be reduced to “10 times 01”. We notice immediately that the sequence can be memorized in a shorter and clearer way. When observing a sequence like 01000101000011101001, however, it is not possible to recognize the rule right away, so we have to memorize it as a whole.

If your phone number happened to be 01 1111-111, you would be able to memorize it straight away, just as you would the number 01 2345-678. The rules hidden behind both numbers are simple and we need no more than a single piece of information to recall them. When it comes to more complex numbers, though, we have to commit several pieces of information to our memories. Sometimes a part of the number contains the date of our birth or a similar sequence that we can easily recall, so memorizing such numbers presents a smaller difficulty than memorizing numbers that possess no apparent pattern. These are, in our eyes at least, completely random.

No recipe for randomness

We can use the concept of algorithmic complexity to define what is random as being a thing for which there exists no shorter recipe than a detailed description of the thing itself. If we limit ourselves to number sequences, then the sequence which is random cannot be reduced to any shorter form or algorithm than the entire actual list of numbers. As there is no rule to sum up the list of numbers, we can only memorize it in its entirety, like a phone number which does not contain a single set of numbers we can recognize.

A binary number, used in the language of computers that can only read ones and zeros, is random when its complexity is equal to the number of its digits. A program that a computer reads in binary code as well cannot be shorter than the number itself. In simpler terms, there is no shorter recipe to write down the number than writing it with all the digits it contains. There can be no other shorter algorithm or program.

All programs for creating random numbers built into our computers are actually only creating the so-called “pseudo-random numbers”. The algorithms that create them are shorter than the numbers they produce, so they do not really meet the strict criterion of randomness based on algorithmic complexity. In an article in 1951, John Von Neumann, the famous mathematician, physicist and father of computer science, summarized this problem in a single sentence: “Anyone who considers arithmetical methods of producing random digits is, of course, in a state of sin.”

The “Monte Carlo” method

However, modern science could hardly progress without generators of (pseudo-) random numbers. Today, random numbers are most frequently used to enable simulations or to help with more complex calculations. The method of using random numbers to solve mathematical problems is very similar to public opinion polls. If we pose a certain question to a smaller sample of population and if the sample only contains randomly chosen representatives of a population that are not, for example, mostly senior citizens or students, their answers can give us a pretty good idea about the population’s opinion concerning a certain subject.

In science, we can deal with the problem of random numbers in a similar way. Suppose we had to calculate the surface of an irregular shape in the form of a heart. Using a procedure that mathematicians named the Monte Carlo method we can evaluate the surface of the heart by circumscribing it with a rectangle, the surface of which is not difficult to calculate. Now, all there is left to do is to evaluate what part of the rectangle is covered by the heart and the problem is solved. But what is the easiest way to assess the ratio of the surface of the entire rectangle to the area covered by the heart? Try randomly placing dots on the rectangle and counting whether you have hit the heart or not. If the dots are really distributed in a random manner, the ratio of the number of dots inside the entire rectangle to the number of dots on the heart will gradually approach the ratio of the rectangle’s surface to the heart’s surface.

Compressing data

Naturally, verifying whether a long sequence of numbers is random is no easy feat. However, mathematicians have developed several methods for testing different random number generators and checking if the random sequences are good enough to be used for a given task.

In our daily lives, we encounter the process of evaluating the degree of randomness when compressing files on our computers which happens almost every day. As we all know, we can use special software devised to compress data and greatly reduce the size of a file on our computer. These file compressing programs look for repetitions within data and create new dictionaries to write down the information in shorter form. Suppose we repeatedly used the word “problem” in our document. A good file compressor will notice that and substitute all the occurrences of the word with a single sign like *. The sentence: “The problem lies in the problematical approaches to solving problems”, will be written in a coded and compressed form: “The * lies in the atical approaches to solving *s”. And the dictionary will add the explanation that the asterisk () means “problem”.

The following rule applies: the more that the size of the file is reduced by compressing information, the less random data is being compressed. When compressing a written document it is thus easy to evaluate the size of our vocabulary. The more we repeat words in our text, the easier it will be for the file compressor to reduce the size of the file containing our document.

This is why file compressing programs are a relatively good judge of the randomness of a certain sequence. The more the data can be compressed, the less random it is. A truly random sequence cannot be compressed by using file compressing programs because the entire computer algorithm is also the shortest possible algorithm describing this sequence. A file of a truly random sequence cannot be compressed.